The old adage “You get what you pay for” doesn’t fully apply to equipment automation interfaces… more accurately, you get what you require, and then what you pay for!

This is especially true when considering the range of capability that may be provided with an equipment supplier’s implementation of the EDA (Equipment Data Acquisition, also known as Interface A) standards. Not only is it possible to be fully compliant with the standard while delivering an equipment metadata model that contains very little useful information, the standards themselves are also silent on the topics of Performance and Data Quality. So you must take extra care to state these requirements and expectations in your purchase specifications if you expect the resulting interface to support the demands of your factory’s data analysis and control applications. Moreover, to the extent these requirements can be tested, you should describe the test methods and tools that you will use in the acceptance process to minimize the chance of ugly surprises when the equipment is delivered.

We have covered the importance of and process for creating robust purchase specifications in a previous posting. This post will focus specifically on aspects of Performance and Data Quality within that context.

Scope of Performance and Data Quality Requirements

From a scope standpoint, Performance and Data Quality requirements are found in a number of sections in an automation specification. The list below is just a starting point suitable for any advanced wafer fab – your needs may extend and exceed these significantly.

Here are some sample requirements that pertain to the computing platform for the EDA interface software:

- The interface computer should have the capability of a 4-core Intel i5 or i7 or better, with processing speed of 2+ GHz, 8 GB of RAM, and 500 GB of persistent storage with at least 50% available at all times.

- The equipment must monitor key performance parameters of the EDA computing platform, such as CPU utilization (%), memory utilization (GB, %), and disk utilization (GB, %) and access rate, using system utilities such as Perfmon (for Windows systems), and store this history either in a log file or in some part of the equipment metadata model.

- The network interface card must support 1 Gbps (gigabit per second) or faster communications.
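As a rough illustration of how the platform requirements above could be checked automatically, here is a minimal Python sketch using only the standard library. The function names `evaluate_platform` and `probe_platform` are hypothetical, the thresholds simply restate the sample requirements (4 cores, at least 50% of storage available), and a real acceptance check would also cover CPU and memory utilization history, which this sketch does not attempt.

```python
import os
import shutil

MIN_CORES = 4              # from the sample requirement: 4-core CPU or better
MIN_FREE_FRACTION = 0.50   # from the sample requirement: at least 50% of storage available


def evaluate_platform(cores, disk_total_bytes, disk_free_bytes):
    """Compare raw platform figures against the sample thresholds (hypothetical helper)."""
    if disk_total_bytes > 0:
        free_fraction = disk_free_bytes / disk_total_bytes
    else:
        free_fraction = 0.0
    return {
        "cores_ok": cores >= MIN_CORES,
        "disk_free_fraction": round(free_fraction, 3),
        "disk_ok": free_fraction >= MIN_FREE_FRACTION,
    }


def probe_platform(path=os.sep):
    """Read core count and disk usage from the local machine and evaluate them."""
    usage = shutil.disk_usage(path)   # named tuple with total, used, free (bytes)
    cores = os.cpu_count() or 0       # cpu_count() may return None
    return evaluate_platform(cores, usage.total, usage.free)


if __name__ == "__main__":
    print(probe_platform())
```

Run periodically and appended to a log file, a check like this would give a simple history of whether the storage headroom requirement is being maintained.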

In the area of equipment model content, the following requirements are directly related to interface performance and data quality:

- The equipment should make the EDA computing platform performance parameters available as parameters of an E120 logical element that represents the EDA interface software itself.

- The supplier must provide a written description of the update rates, recommended sampling intervals, normal operating ranges and behaviors, and high/low/rate-of-change limits for all key process parameters. These will be used to design data quality filters in the data path between the equipment and the consuming applications/users.

- Equipment parameters provided through the EDA interface must exhibit a number of data quality characteristics, including, but not limited to: an internal sampling/update rate sufficient to represent the underlying signal accurately; trace report timing that is consistent with the sampling interval within +/- 1.0%; values in adjacent trace reports that are the then-current values at each specified sampling interval; and rejection of obvious outliers.
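To make the idea of a data quality filter more concrete, the sketch below shows one way the supplier-documented high/low and rate-of-change limits might be applied to a stream of samples. It is an assumption-laden illustration, not part of the EDA standards: the `Limits` class, the `filter_samples` function, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    """Supplier-documented limits for one parameter (hypothetical structure)."""
    low: float
    high: float
    max_rate: float  # maximum plausible absolute change per second


def filter_samples(samples, limits):
    """Split (timestamp_s, value) samples into accepted and rejected lists.

    A sample is rejected if it falls outside [low, high], or if its rate of
    change relative to the last accepted sample exceeds max_rate.
    """
    accepted, rejected = [], []
    for t, v in samples:
        if not (limits.low <= v <= limits.high):
            rejected.append((t, v, "out of range"))
            continue
        if accepted:
            t_prev, v_prev = accepted[-1]
            dt = t - t_prev
            if dt <= 0 or abs(v - v_prev) / dt > limits.max_rate:
                rejected.append((t, v, "rate of change"))
                continue
        accepted.append((t, v))
    return accepted, rejected


# Illustrative values only: a parameter sampled at 10 Hz
samples = [(0.0, 100.0), (0.1, 100.5), (0.2, 250.0), (0.3, 101.0), (0.4, 130.0)]
accepted, rejected = filter_samples(samples, Limits(low=0.0, high=200.0, max_rate=50.0))
```

Here the 250.0 reading is rejected as out of range and the jump to 130.0 is rejected on rate of change. A production filter would also need a strategy for genuine step changes, which this simple comparison against the last accepted sample would keep rejecting.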

Advanced users of the EDA standards are now raising their expectations for the equipment to provide self-monitoring and diagnosis capability in the form of built-in data collection plans (DCPs), as expressed in some of the following requirements:

- The supplier must provide built-in DCPs to support common equipment performance monitoring, diagnostic, and maintenance processes that are well known to the supplier. Documentation for these DCPs must define their purpose, activation conditions, interface bandwidth consumed, and the types of analysis the collected data enables.

- The supplier must describe the operating conditions that can lead to a PerformanceWarning situation for the EDA interface.

- The supplier must describe the algorithms used to deactivate DCPs under PerformanceWarning conditions. These might include LIFO (i.e., the last DCP activated is the first to be deactivated), decreasing order of bandwidth consumed or “size” (in terms of total # of parameters and # of trace/event requests), etc.
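The two deactivation strategies mentioned above (LIFO and decreasing bandwidth) can be compared with a small sketch. Everything here is hypothetical: the `ActiveDcp` structure, the notion of a single `capacity` threshold in parameters per second, and the example numbers are assumptions for illustration, and an actual equipment implementation may use quite different criteria to enter and leave a PerformanceWarning condition.

```python
from dataclasses import dataclass


@dataclass
class ActiveDcp:
    """An active data collection plan (hypothetical, simplified to one trace request)."""
    name: str
    activated_at: float  # activation time in seconds; larger means more recent
    params: int          # number of parameters collected
    interval_s: float    # trace sampling interval in seconds

    @property
    def bandwidth(self):
        """Parameters per second consumed by this DCP."""
        return self.params / self.interval_s


def lifo_order(dcps):
    """Most recently activated DCP first."""
    return sorted(dcps, key=lambda d: d.activated_at, reverse=True)


def bandwidth_order(dcps):
    """Largest bandwidth consumer first."""
    return sorted(dcps, key=lambda d: d.bandwidth, reverse=True)


def dcps_to_deactivate(dcps, capacity, order):
    """Deactivate DCPs in the given order until total bandwidth fits within capacity."""
    total = sum(d.bandwidth for d in dcps)
    victims = []
    for d in order(dcps):
        if total <= capacity:
            break
        victims.append(d.name)
        total -= d.bandwidth
    return victims


# Illustrative load: 10,000 + 5,000 + 100 = 15,100 parameters per second
active = [
    ActiveDcp("A", activated_at=1.0, params=1000, interval_s=0.1),
    ActiveDcp("B", activated_at=2.0, params=500, interval_s=0.1),
    ActiveDcp("C", activated_at=3.0, params=100, interval_s=1.0),
]
lifo_victims = dcps_to_deactivate(active, capacity=12_000, order=lifo_order)
bandwidth_victims = dcps_to_deactivate(active, capacity=12_000, order=bandwidth_order)
```

With these numbers, LIFO deactivates C and then B, while the bandwidth-ordered strategy deactivates only A. The difference shows why the supplier's documentation of the algorithm matters: the same overload can shut down very different data collection clients.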

Because of the power and complexity of the DCP structure defined in the EDA standards, it is not sufficient to specify the raw communications performance requirement as a small number of isolated criteria, such as total bandwidth (in parameters per second) or minimum sampling interval. Rather, since the EDA interface must support a variety of data collection client demands for a wide range of production equipment, these requirements should be expressed as combinations of sampling interval, # parameters per DCP, # of simultaneously active DCPs, group size, buffering interval, response time for ad hoc “one-shot” DCPs, maximum latency of event generation after the related equipment condition occurred, consistency of timestamps in trace reports with the specified sampling interval, and perhaps others.

Moreover, some equipment types may have more stringent performance requirements than others, depending on the criticality of timely data for the consuming applications… so there may be process-specific performance requirements as well.

Measurement and Testing

Methods for measuring and testing the above requirements should also be described in the purchase specifications so that equipment suppliers can confirm the requirements are being met during the development process and can demonstrate compliance before and after shipping the equipment. Clarity at this phase saves time and expense later on.

Examples of such requirements include:

- The supplier must test the EDA interface across the full range of performance criteria specified above and provide reports documenting the results.

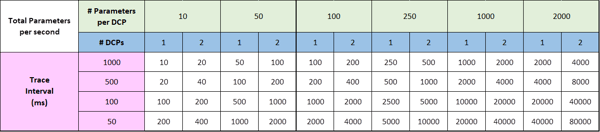

- An earlier requirement states that the EDA interface must be capable of reporting at least 2000 parameters at a sampling interval of 0.1 seconds (10 Hz) with a group size of 1, for a total data collection capacity (bandwidth) of 20,000 parameters per second. In addition to this overall bandwidth capability, the supplier must demonstrate that this performance is achievable over a range of specific data collection deployment strategies, meaning different numbers and sizes of DCPs, different sampling intervals, group sizes, etc., without causing the EDA interface to reach one of its “PerformanceWarning” states or overstress its computing platform. To this end, all combinations of the following data collection configuration settings must be run for at least 15 seconds each, assuming the equipment has n processing modules:

  - Trace intervals (in seconds): 1, 0.5, 0.2, 0.1 (and 0.05 if possible)

  - # of parameters per DCP: 10, 50, 100, 250, 500, 1000 (and 2000 if possible)

  - # of DCPs: 1, 2, 3, … to n

  - Group size: 10, 5, 2, 1

- The test client should be run on a separate computing platform with sufficient computing power to “stay ahead” of the EDA interface computer; in other words, the EDA interface should never have to wait on the client system.

- Test reports should indicate the start and stop time of each iteration (i.e., one combination of the above settings), and verify that the timestamps of the data collection reports sent by the EDA interface are within +/- 1% of the value expected if the samples were collected exactly at the specified trace interval.
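The timestamp verification described in the last requirement could be automated along the following lines. This sketch assumes one particular reading of the +/- 1% criterion, namely that the gap between consecutive sample timestamps must be within 1% of the trace interval; the function name `check_trace_timing` and the example timestamps are hypothetical.

```python
def check_trace_timing(timestamps, interval_s, tolerance=0.01):
    """Return (index, gap) for every consecutive gap outside the tolerance.

    timestamps: sample times in seconds, in the order they were collected
    interval_s: the trace interval requested in the DCP
    tolerance:  allowed deviation as a fraction of the interval (0.01 = 1%)
    """
    violations = []
    for i in range(1, len(timestamps)):
        gap = timestamps[i] - timestamps[i - 1]
        if abs(gap - interval_s) > tolerance * interval_s:
            violations.append((i, gap))
    return violations


# Illustrative timestamps for a 0.1 s trace interval; the last sample arrives late
timestamps = [0.0, 0.1, 0.2005, 0.3, 0.45]
violations = check_trace_timing(timestamps, interval_s=0.1)
```

In this example only the final gap (about 0.15 s) is flagged; the 0.5 ms deviations earlier in the series are within the 1 ms allowance for a 0.1 s interval. An alternative reading of the requirement would compare each timestamp against its ideal position from the start of the run, which is stricter because small errors are not allowed to accumulate.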

This approach is shown in tabular form for a 2-chamber tool (see below); since Group Size does not (or should not) impact the effective parameters per second rate, it is not shown in the table.
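For readers who want to generate the full set of test iterations for their own equipment, here is a minimal Python sketch that enumerates every combination of the required settings and computes the effective parameters per second for each. The name `build_test_matrix` and the dictionary keys are hypothetical, and the optional settings (0.05 s interval, 2000 parameters per DCP) are left out.

```python
from itertools import product

# Required settings from the test requirement (optional values omitted)
TRACE_INTERVALS_S = [1, 0.5, 0.2, 0.1]
PARAMS_PER_DCP = [10, 50, 100, 250, 500, 1000]
GROUP_SIZES = [10, 5, 2, 1]
MIN_RUN_SECONDS = 15  # minimum duration of each iteration


def build_test_matrix(n_modules):
    """Enumerate all test iterations for a tool with n_modules processing modules."""
    dcp_counts = range(1, n_modules + 1)
    rows = []
    for interval, params, dcps, group in product(
        TRACE_INTERVALS_S, PARAMS_PER_DCP, dcp_counts, GROUP_SIZES
    ):
        rows.append({
            "interval_s": interval,
            "params_per_dcp": params,
            "dcp_count": dcps,
            "group_size": group,
            # Group size changes how samples are batched, not how many are collected
            "params_per_second": params * dcps / interval,
        })
    return rows


matrix = build_test_matrix(n_modules=2)
iterations = len(matrix)
min_test_seconds = iterations * MIN_RUN_SECONDS
peak_rate = max(row["params_per_second"] for row in matrix)
```

For a 2-chamber tool this yields 4 × 6 × 2 × 4 = 192 iterations, or at least 48 minutes of test time at 15 seconds each. The most demanding combination (2 DCPs of 1000 parameters at a 0.1 second interval) works out to 20,000 parameters per second, matching the stated bandwidth requirement.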

- A summary report for all performance tests that shows acceptable message generation and transmission timing across the full range of data collection test criteria must be available.

- Detailed SOAP logs for specific performance tests must be available on request.

In Conclusion

We hope you now have some appreciation for the importance of solid requirements in this area, and can accurately assess how well your current purchase specifications express your actual needs. If you want to know more about a well-defined process for improving your specifications, or have any other questions regarding the status and outlook of the EDA standards, and how they can be implemented, please contact us.