Earlier this quarter (October 2022) the SEMI Smart Manufacturing Council conducted one of its regular meetings with factory stakeholders to determine what sort of SEMI-sponsored activities could accelerate this important industry initiative. One focus area of the discussion was getting more and better data out of the sub-fab, since the performance and integration of these components are becoming more important for many of the leading manufacturers. The related, familiar topic of achieving better visibility into equipment and process behavior was also raised.

In that context, as it often does, the role of industry standards came up as a key enabling technology. The following question was posed: “Are the current standards sufficient to support the broad range of smart manufacturing use cases that factories envision, or does more need to be done? Specifically, since the Information and Control Committee is working on the Freeze 3 version of the EDA (Equipment Data Acquisition, also known as Interface A) suite of standards, are there problems that need to be addressed to improve the adoption and usage of these standards?”

This question triggered a lively (but non-convergent!) conversation among the participants in the meeting, many of whom have years (no, decades) of experience working in the standards community. Before long, it became clear that the group had no clear answer to these questions, and that more information from the actual user community was needed. Someone suggested that the Council pose the questions to a broader audience in survey form, and this proposal was enthusiastically accepted. Several of us volunteered to generate a draft survey for SEMI’s review and refinement, which got the activity off to a running start.

Given this opportunity to connect directly with the ultimate beneficiaries of SEMI Standards, there was a temptation to broaden the survey’s scope to cover other topics of interest in the smart manufacturing domain. Cognizant of the fact that longer surveys have a lower chance of being returned with useful data, however, the group largely resisted this temptation, including the most important questions in an easy-to-answer format and leaving room for optional additional information in the responses for those so inclined.

The final survey was issued by SEMI around October 21, 2022, with a requested response date of November 15 (which has been extended to gather more feedback). Rather than repeat the entire content here, I include the following excerpts from the introduction and the table of contents:

The purpose of this questionnaire is to assess the status of the industry’s experience with and impressions of the SEMI EDA (Equipment Data Acquisition) standards suite to guide current and future initiatives that improve its capabilities, communicate implementation best practices, and foster broader adoption to realize the vision of SEMI’s Smart Manufacturing Community.

Respondents to this questionnaire should include companies that have already deployed the EDA standards in volume production as well as those that have yet to do so. Respondents in the latter category can simply skip the questions about current usage/issues.

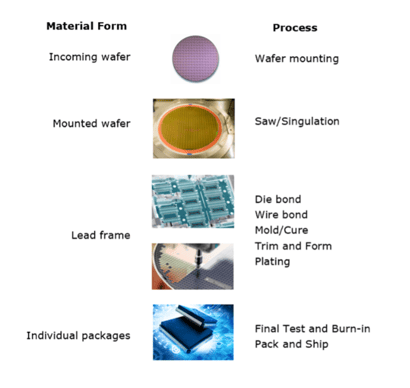

The target audience was originally thought to be stakeholders in 300mm wafer fabs, but with the growing interest in enhanced data collection and automation for backend (packaging, assembly, and test) factories AND growth in the 200mm wafer fab segment (including new models of 200mm manufacturing equipment), it has been broadened to include these domains as well.

Most questions are posed in multiple choice format, but additional detail is always welcome in the Comments fields where appropriate.

SEMI will compile the responses and share the result with the industry while preserving the anonymity of individual responses.

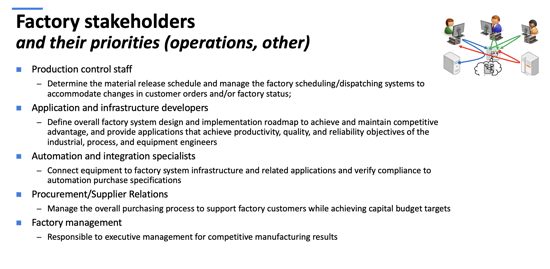

- Manufacturing Stakeholders

- Manufacturing KPIs (Operational Performance)

- Manufacturing Application Support

- Automation Requirements and Equipment Acceptance

- Current (or intended) Usage

- Issues and Expectations

- Other Data Collection Needs

Appendix – Stakeholders and Acronyms

Moreover, at this writing, the link to the on-line survey is still active here:

→ SEMI Equipment Data Acquisition / Interface A Standards Survey – Questionnaire on Usage, Requirements, Expectations, and Issues.

Finally, if you are interested in the details of the survey, and especially if you would like to provide your inputs directly to SEMI, you should contact Mark da Silva, Chair, SEMI Smart Manufacturing, Global Executive Committee, at mdasilva@semi.org.

And as always, to discuss your company’s specific Smart Manufacturing journey and to understand how we at Cimetrix by PDF Solutions can help, click on the contact button below. We look forward to hearing from you!

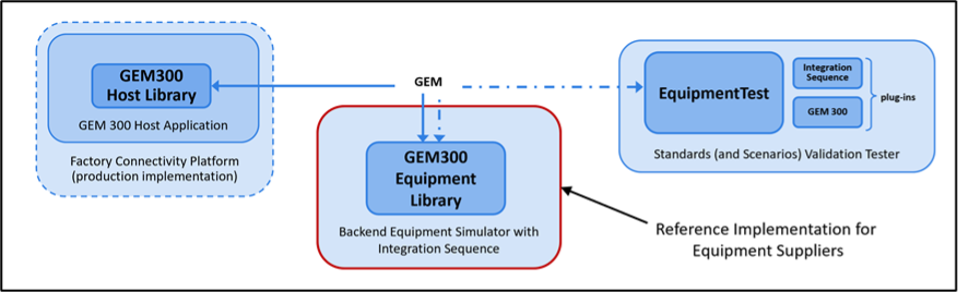

The second key innovation is basing the messages that constitute this sequence on existing, mature SEMI GEM 300 standards, thereby reducing (and eventually eliminating) the need for custom implementation software. Amazingly, except for the need to support “nested carriers,” realizing the integration sequence requires almost no modifications to the existing GEM 300 standards.

The fourth innovation is more procedural than technical: by openly sharing this design with the industry standards community, we believe it will be enhanced and further generalized by other assembly and packaging thought leaders, increasing the level and sophistication of overall automation capability while lowering integration and operation costs across the industry.

We’re drowning in data but still thirsting for wisdom… This is a paraphrase of what one of the virology doctors interviewed at the beginning of the COVID-19 pandemic said about the challenges they faced in charting a course of action using the vast volumes of data being collected around the world. The sentiment reminded me that, in an entirely separate but no less complex domain, electronics manufacturers face similar challenges using data from the thousands of disparate sources in a factory to make the decisions that ultimately determine the viability of their enterprises.

Near the end of January for almost two decades, the companies that form the Automation Network Dresden (AND) have hosted a gathering of semiconductor automation professionals in the picturesque setting of Dresden, Germany. The chill of winter has always been in stark contrast to the warmth of the reception the speakers and participants enjoy at this event. The big question this year was “How could the organizers possibly maintain this tradition amid the COVID-19 pandemic and its travel restrictions?”

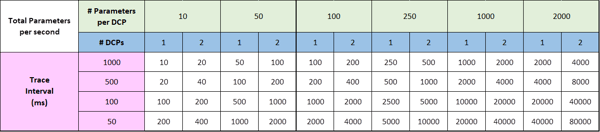

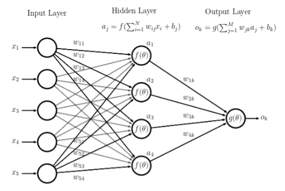

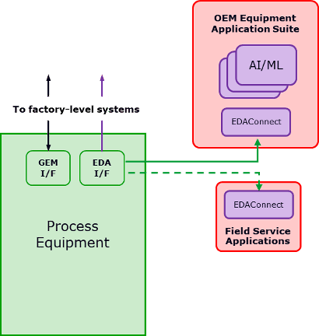

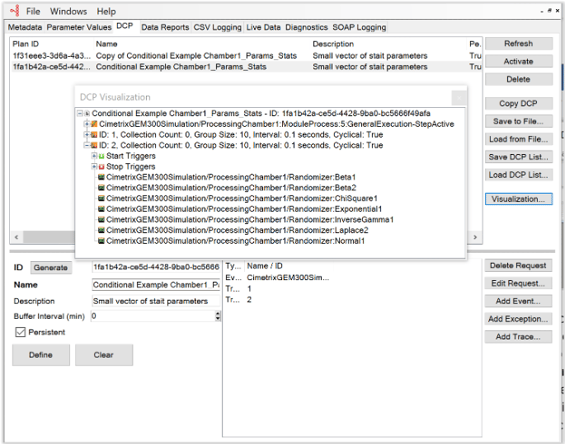

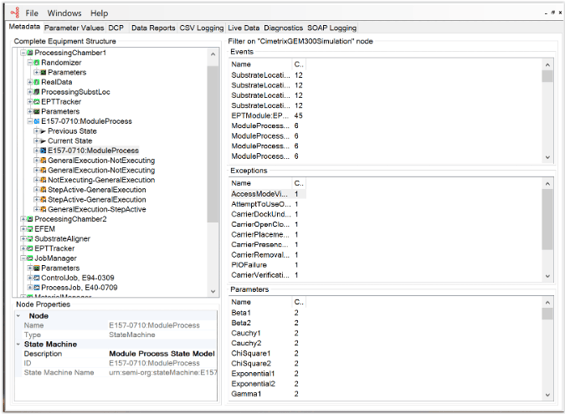

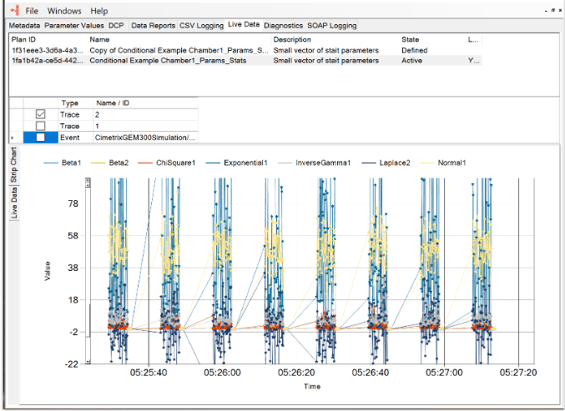

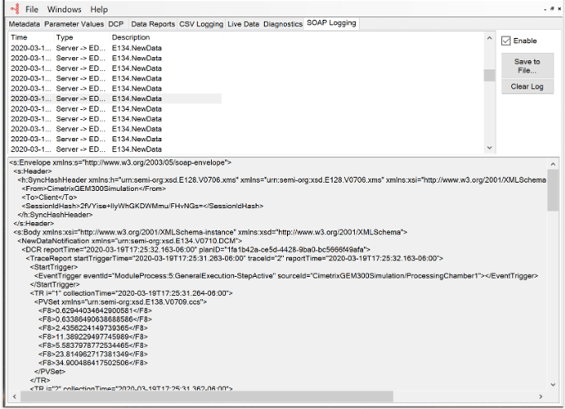

The EDA standards also include a provision for “built-in DCPs” (DCP = Data Collection Plan) which can be shipped with the equipment and protected from accidental deletion at the factory site. These DCPs could be crafted by the equipment supplier to directly feed whatever value-added applications the supplier chose to develop, whether these resided on a computer local to the equipment in the fab, on portable computers used by field service engineers to diagnose problems, or on remote cloud-based systems allowed to connect via secure EDA-defined URLs. This flexibility opens up a wide range of application types, from those that embed equipment-specific algorithms to generic Machine Learning frameworks… the possibilities are endless.

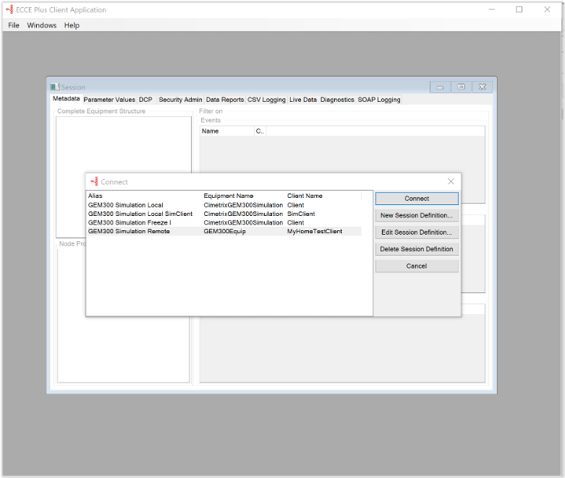

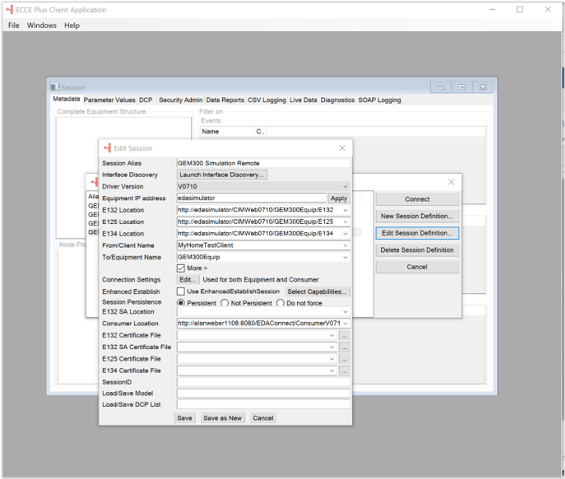

The COVID-19 pandemic is impacting businesses worldwide, and in many regions, working from home is now mandatory or at least strongly encouraged.

The 31st annual APC Conference is now in the history books, and the diversity of topics, presenters, and local distractions made it well worth the visit to San Antonio! This year’s agenda featured half-day tutorials on the basics of APC and cyber-security, keynotes from chip makers and leading suppliers on automotive industry requirements, smart equipment, and smart manufacturing, and a series of packed technical sessions covering sensors and equipment control, fault detection and feedforward/feedback control, advanced analytics, and standards.

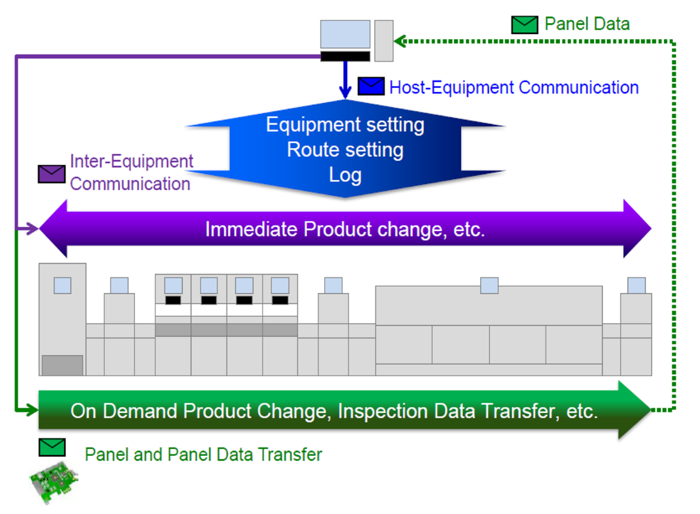

The SMT-ELS standard has come a long way in a short time, and the ambitious, integrated demonstration created by four major SMT suppliers (Fuji, Juki, Panasonic, Yamaha) that was exhibited in June (Japan) and August (China) will again be shown at productronica (Munich, 13-15 November). The basic functions of SMT-ELS (officially designated as SEMI A1, A1.1, and A2) appear in the figure below. Cimetrix will likewise demonstrate this new standard at productronica, showing not only an equipment-level implementation of the M2M features but also the host-based configuration process and a plug-in for doing protocol validation tests.

If you would like any further information, you can speak with a Cimetrix expert, or you can stop by our booth at productronica this week (Hall A3 booth 451).

This conference is one of the longest-running events specific to the semiconductor manufacturing industry, and always features speakers and topics that are germane to the industry’s leading practitioners of equipment/factory data collection, analysis, optimization, and control. This year’s agenda promises more of the same –

The old adage “You get what you pay for” doesn’t fully apply to equipment automation interfaces… more accurately, you get what you require, and then what you pay for!