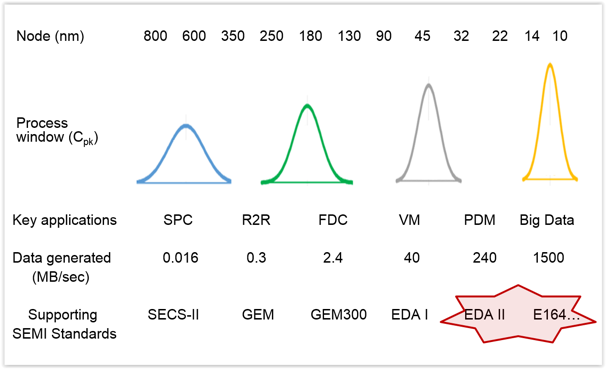

With all the recent news of increased EDA (also known as “Interface A”) adoption, especially in Asia, this is the perfect time to highlight the “Top 10 Best Practices” that semiconductor manufacturing equipment suppliers can follow in planning and executing their implementations of this important suite of standards. As we’ve said in previous blog postings and other EDA-related material, it is best to take a long-term view of your EDA interface design, independent of what a particular semiconductor manufacturer’s automation specifications may initially require. In so doing, you can be confident that your implementation will be ready for future EDA requirements, freeing your control system software team to focus on the features that truly differentiate your equipment from your competitors’. The information in this posting can give you a running start on this process. Note that the “best practices” summarized below are the culmination of many years of EDA standards definition, related software product development, and manufacturing production experience among the early adopters. As such, most of them could support a dedicated blog posting, so watch for these in the coming months. In the meantime, if you’re interested in more specifics, please contact us.

1. Build a useful equipment model

First and foremost, since the content of the “equipment metadata model” is effectively the data collection “contract” between the equipment supplier and the factory users, your customer’s ultimate satisfaction with the EDA interface depends on the content and structure of this model. The role most affected by this model is the process engineer, so the equipment component, variable, event, and exception names should match the tool documentation, and the logical hierarchy should mirror the actual hardware structure.
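To make the idea of a hierarchy that mirrors the hardware concrete, here is a minimal Python sketch. It is not the actual EDA metadata schema defined by the SEMI standards; the `Component` class, the path format, and the names `Etcher`, `PM1`, and `RFGenerator` are all hypothetical, chosen only to show how nesting and documentation-matched names produce self-explanatory paths for a process engineer.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Component:
    """One node of a simplified, hypothetical equipment model (not the SEMI schema)."""
    name: str  # should match the name used in the tool documentation
    variables: List[str] = field(default_factory=list)
    events: List[str] = field(default_factory=list)
    exceptions: List[str] = field(default_factory=list)
    children: List["Component"] = field(default_factory=list)  # sub-assemblies

    def walk(self, parent: str = "") -> Iterator[Tuple[str, str]]:
        """Yield (kind, path) pairs depth-first, so each path reflects the hardware nesting."""
        path = f"{parent}/{self.name}" if parent else self.name
        yield ("component", path)
        for kind, names in (("variable", self.variables),
                            ("event", self.events),
                            ("exception", self.exceptions)):
            for n in names:
                yield (kind, f"{path}.{n}")
        for child in self.children:
            yield from child.walk(path)


# Hypothetical tool: one process module containing an RF generator.
tool = Component("Etcher", children=[
    Component("PM1", events=["RecipeStart"], children=[
        Component("RFGenerator",
                  variables=["ForwardPower", "ReflectedPower"],
                  exceptions=["RFOverload"]),
    ]),
])

for kind, path in tool.walk():
    print(f"{kind:<10} {path}")
```

With this structure, a reading such as forward power appears as `Etcher/PM1/RFGenerator.ForwardPower`, which a process engineer can locate on the physical tool without a separate mapping document.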

2. Consider non-functional requirements

System performance expectations change over time, and, as a result, the equipment automation requirements may not include sufficient or up-to-date detail in this area. Therefore, you must document your assumptions about the performance of your interface in terms of maximum sampling rate, average number of parameters per data collection plan (DCP), total bandwidth required (e.g., 20,000 parameters per second), and other factors important to the customer. Beyond performance, the non-functional requirements you document should also cover scalability, availability, flexibility, extensibility, and ease of use, among others.
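The bandwidth figure is essentially the sum, over all concurrently active DCPs, of parameter count times sampling rate. A minimal Python sketch of that arithmetic follows; the `DcpEstimate` record, the DCP names and counts, and the 50,000 parameters-per-second design capacity are hypothetical planning numbers, chosen only to reproduce the 20,000 parameters-per-second example above.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class DcpEstimate:
    """Hypothetical planning record for one data collection plan (DCP)."""
    name: str
    parameters: int      # number of parameters collected by this DCP
    sampling_hz: float   # trace sampling rate, samples per second


def total_parameters_per_second(dcps: Iterable[DcpEstimate]) -> float:
    """Sum parameters x sampling rate over all DCPs active at the same time."""
    return sum(d.parameters * d.sampling_hz for d in dcps)


def utilization(load: float, capacity: float) -> float:
    """Fraction of the documented interface capacity consumed by the load."""
    return load / capacity


active_dcps = [
    DcpEstimate("FDC-chamber", parameters=500, sampling_hz=20.0),
    DcpEstimate("FDC-transfer", parameters=800, sampling_hz=10.0),
    DcpEstimate("R2R-metrology", parameters=200, sampling_hz=10.0),
]

load = total_parameters_per_second(active_dcps)  # 10,000 + 8,000 + 2,000
print(f"{load:,.0f} parameters/s")               # 20,000 parameters/s
print(f"{utilization(load, 50_000):.0%} of an assumed 50,000 parameters/s capacity")  # 40%
```

Writing the assumptions down in this form makes it easy to show a customer how much headroom remains if they add DCPs or raise sampling rates.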

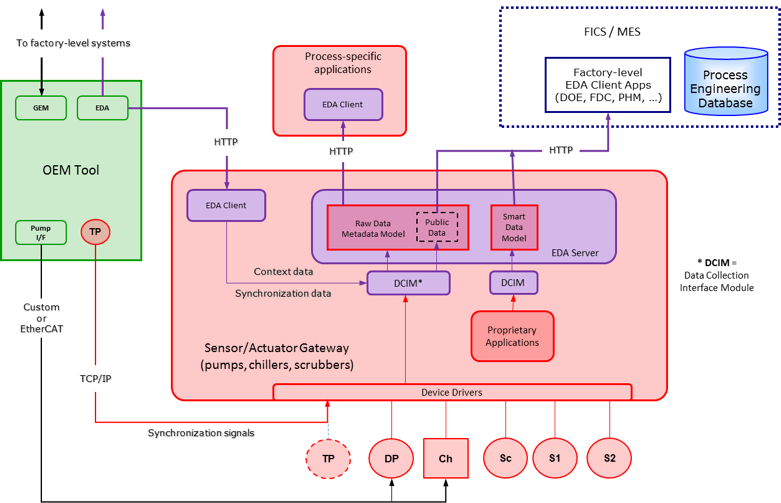

3. Define robust system architecture

The architecture of an EDA interface is greatly affected by the non-functional requirements discussed in item 2 above, in addition to the specific capabilities required by the SEMI standards. One way to ensure these requirements can be met is to separate the EDA interface software from the equipment controller; in other words, run it on a separate computer dedicated to the interface. Moreover, stick with the services and protocols defined by the standards. Don’t be tempted to implement custom extensions that apply only to a specific customer or client application, as these only increase your future support costs.

4. Choose platform with extra “headroom”

Computer hardware is inexpensive compared to the cost of downtime and support, so choose a platform that has room to grow. Based on many years of production experience, Cimetrix can provide specific guidelines in terms of CPU speed, number of cores, memory, disk, and other system attributes. Note that you may also be expected to upgrade these platforms in the field as the standard and/or customer requirements evolve, so plan accordingly!

5. Implement E164 common metadata standards

The E164 “EDA Common Metadata” standards likewise incorporate equipment modeling best practices from many early EDA implementations, so you should treat them as a required baseline for your equipment model, whether or not the first EDA customer calls for them in the automation specs. Adopting E164 when you first develop an EDA interface is actually easier than devising your own structural and naming conventions, whereas retrofitting it later can be very difficult. (Note that we have had a number of previous blog postings on this topic.)

6. Use equipment modeling tools

Since typically 75% of the interface development and maintenance time is spent dealing with the content and behavior of the equipment model, these tasks are perfect candidates for (at least partial) automation via model creation/editing tools and associated “wizards.” These tools should be able to generate an E164-compliant baseline model to which process-specific information can be added naturally. Moreover, if possible, use the resulting tool configuration files to create models programmatically, which will greatly reduce support costs over time.
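As a rough illustration of building models from configuration files rather than by hand, the sketch below reads a small JSON description and adds a common set of baseline variables to every process module. The JSON layout, the `build_model` helper, and the baseline variable names are hypothetical; they are not the file format of any particular modeling tool, nor the content of E164.

```python
import json
from typing import Any, Dict, List

# Hypothetical configuration text; real modeling tools use their own file formats.
CONFIG_TEXT = """
{
  "tool": "Etcher",
  "modules": [
    {"name": "PM1", "variables": ["ChamberPressure", "RFForwardPower"]},
    {"name": "PM2", "variables": ["ChamberPressure", "State"]}
  ]
}
"""

# Hypothetical baseline entries added to every module (stand-ins, not E164 content).
BASELINE_MODULE_VARIABLES = ["State", "RecipeName"]


def build_model(config: Dict[str, Any]) -> Dict[str, List[str]]:
    """Map each module path to its variables: baseline first, then process-specific, no duplicates."""
    model: Dict[str, List[str]] = {}
    for module in config["modules"]:
        path = f"{config['tool']}/{module['name']}"
        names = list(BASELINE_MODULE_VARIABLES)
        for var in module.get("variables", []):
            if var not in names:  # a module may repeat a baseline name; keep one copy
                names.append(var)
        model[path] = names
    return model


model = build_model(json.loads(CONFIG_TEXT))
for path, variables in model.items():
    print(path, variables)
```

Because every module receives the baseline entries from one place, a change to the shared conventions is made once and regenerated everywhere instead of being hand-edited in each model.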

7. Provide complete visibility into equipment behavior

The principal motivation for EDA adoption cited by factory operations staff across the industry is “better understanding of equipment/process behavior.” To satisfy this need, equipment suppliers should provide as much information as possible about key process variables, events, and exceptions, and about all the underlying mechanisms (sensors, actuators, I/O, low-level fault conditions) that affect them. Also make sure the E157 “steps” (recipe step-level transition events) are visible and meaningful, to enable the kind of fine-grained, condition-based trace data collection required by leading-edge fault detection, run-to-run control, and predictive analytics applications. Apply the principle “when in doubt, include it”; your customers will thank you.
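The value of meaningful step events is that a trace can be started and stopped by them rather than by a fixed timer. The sketch below assumes a simplified, time-ordered stream of hypothetical `StepEvent` and `Sample` records; the event names `StepStart` and `StepEnd` are illustrative only and are not the state or event names defined in E157.

```python
from dataclasses import dataclass
from typing import Iterable, List, Union


@dataclass(frozen=True)
class StepEvent:
    """Hypothetical recipe step transition; names are illustrative, not E157's."""
    kind: str  # "StepStart" or "StepEnd"
    step: int


@dataclass(frozen=True)
class Sample:
    """One trace sample of a single hypothetical process variable."""
    time_s: float
    value: float


def collect_step_trace(stream: Iterable[Union[StepEvent, Sample]],
                       target_step: int) -> List[Sample]:
    """Keep only samples that arrive between StepStart and StepEnd of target_step."""
    collecting = False
    trace: List[Sample] = []
    for item in stream:
        if isinstance(item, StepEvent):
            if item.step == target_step:
                # Start on the target step's start event, stop on its end event.
                collecting = item.kind == "StepStart"
        elif collecting:
            trace.append(item)
    return trace


stream = [
    Sample(0.0, 1.0),
    StepEvent("StepStart", 2),
    Sample(0.1, 5.0),
    Sample(0.2, 5.5),
    StepEvent("StepEnd", 2),
    StepEvent("StepStart", 3),
    Sample(0.3, 9.0),
]
trace = collect_step_trace(stream, target_step=2)
print([s.value for s in trace])  # [5.0, 5.5]
```

If step events are missing or ambiguous, a client can only fall back to time windows, which blur the boundaries that fault detection and run-to-run models depend on.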

8. Build in “hooks” for field service support

An EDA interface can be valuable for your own field support team if the proper “hooks” are included in the model from the outset. These capabilities range from a simple “sniff test” (Is the interface up and running?) to a complete recent history of the platform’s operating conditions and of the EDA clients’ demands on the interface. An explicit logging strategy should also be defined and documented to enable the factory customers to do their part in getting you the information required for prompt, one-pass success in support situations.
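Both ends of that range can be sketched together: a liveness check based on a heartbeat, plus a bounded history of load snapshots that a field engineer could inspect. `ServiceHooks`, `LoadSnapshot`, the 10-second timeout, and the history size are hypothetical; a real interface would expose such data through its model or logs in whatever way its product supports.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Optional


@dataclass(frozen=True)
class LoadSnapshot:
    """Hypothetical record of platform conditions and client demand at one moment."""
    timestamp: float
    cpu_percent: float
    active_dcps: int
    parameters_per_second: float


class ServiceHooks:
    """Hypothetical field-service hooks: a liveness check plus a bounded load history."""

    def __init__(self, heartbeat_timeout_s: float = 10.0, history_size: int = 3600):
        self.heartbeat_timeout_s = heartbeat_timeout_s
        self.last_heartbeat: Optional[float] = None
        # deque with maxlen silently discards the oldest snapshot when full.
        self.history: Deque[LoadSnapshot] = deque(maxlen=history_size)

    def heartbeat(self, now: float) -> None:
        self.last_heartbeat = now

    def record(self, snapshot: LoadSnapshot) -> None:
        self.history.append(snapshot)

    def sniff_test(self, now: float) -> Dict[str, object]:
        """Is the interface alive, and what was the recent peak demand?"""
        alive = (self.last_heartbeat is not None
                 and now - self.last_heartbeat <= self.heartbeat_timeout_s)
        peak = max((s.parameters_per_second for s in self.history), default=0.0)
        return {"alive": alive, "snapshots": len(self.history),
                "peak_parameters_per_second": peak}


hooks = ServiceHooks(heartbeat_timeout_s=10.0, history_size=3)
hooks.heartbeat(now=100.0)
for t, pps in [(95.0, 12000.0), (96.0, 18000.0), (97.0, 15000.0), (98.0, 9000.0)]:
    hooks.record(LoadSnapshot(t, cpu_percent=40.0, active_dcps=4, parameters_per_second=pps))
print(hooks.sniff_test(now=105.0))
# {'alive': True, 'snapshots': 3, 'peak_parameters_per_second': 18000.0}
```

Bounding the history keeps the hook cheap enough to leave enabled in production, which is exactly when support teams need it.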

9. Develop thorough test plans and use them

In addition to the range of test techniques expected for mission-critical software (unit, system, regression), EDA interfaces should be subjected to performance and stability testing as well. Most customers will also require standards compliance and other acceptance tests to be run, with the results provided both before and after delivery of the equipment. Where possible, industry-accepted test packages are preferable for this purpose.
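Stability testing often comes down to checking that resource usage does not trend upward during a long soak run. One simple way to express that check, assumed here rather than taken from any standard or test package, is an ordinary least-squares slope of memory use over time compared against an allowed growth rate. The function names, sample values, and the 1 MB/hour threshold are hypothetical.

```python
from typing import Sequence


def trend_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys versus xs."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    if den == 0:
        raise ValueError("xs must not all be equal")
    return num / den


def is_stable(hours: Sequence[float], memory_mb: Sequence[float],
              max_growth_mb_per_hour: float) -> bool:
    """Pass if memory use grows no faster than the allowed rate over the soak run."""
    return trend_slope(hours, memory_mb) <= max_growth_mb_per_hour


hours = [0, 1, 2, 3, 4]
steady = [512, 513, 512, 514, 513]   # noise, no real growth (slope about 0.3 MB/h)
leaky = [512, 530, 548, 566, 584]    # steady growth of 18 MB/h
print(is_stable(hours, steady, max_growth_mb_per_hour=1.0))  # True
print(is_stable(hours, leaky, max_growth_mb_per_hour=1.0))   # False
```

A fitted slope is less sensitive to momentary spikes than comparing the first and last samples, so short bursts of client activity do not fail an otherwise healthy run.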

10. Use proven commercial software

Last, but not least, you should heed the advice of race car drivers, test pilots, and stuntmen who regularly caution their audiences “Don’t try this at home!” The related message for interface developers is that the EDA standards, while mature and well documented, are complex, moving targets that require significant expertise, time, and effort to understand and implement reliably. For most equipment suppliers, these resources are far better spent building features that differentiate the equipment, relying on companies with proven track records to provide off-the-shelf interface software products that minimize both time-to-market and project risk.♦

We sincerely hope this material is useful to you. Please feel free to contact us for more information.